Digital demand

The depressed economy has put hospital data centers in a difficult position. Capital funding has dwindled, but the need for information-based systems continues to grow. This has posed a dilemma for health facilities professionals: how to accommodate necessary growth and flexibility in their data center ecosystem with fewer and fewer available resources.

The drive toward health information exchanges and the adoption of the electronic health record (EHR), along with data-driven applications such as digital imaging, the integrated operating room and patient bedside computing, is creating a need to rethink the data centers that support them. The data center connects and distributes critical data supporting patient applications, so it cannot be ignored.

The seemingly practical answer is to build, but building or expanding a data center is not as easy as it sounds. Aside from the large capital outlay, there are issues associated with acquiring additional space to build. Furthermore, the existing supporting infrastructure may not be sufficient to accommodate the data center's growth.

A larger data center footprint likely means more operational cost in terms of staffing and support systems. Additionally, the criticality of the data supporting patient care or medical research requires a highly available network with not only a robust data center, but also a powerful disaster recovery site that can be called into action quickly.

Clearly, embarking on a new construction project is out of reach for many health care facilities at this time. Health facilities professionals instead must become resourceful in their growth strategies by looking at maximizing the ability of existing infrastructure to meet expanding demand — before spending money they don't have. This requires developing a strategy to use and operate the existing data center more efficiently.

Getting started

There are several first steps to consider when developing a hospital data center strategy. They include:

Creating a baseline. The key to good planning is to take inventory of the systems and equipment already in place. The best way to do this is to commission a detailed study that inventories available space, computing assets (e.g., servers, network equipment and applications) and infrastructure capacities (e.g., power and cooling).

Getting a read on the infrastructure is key because power and cooling really dictate how much capacity can be tapped to accommodate growth. Measuring and monitoring power usage within the data center is critical, and if it can be measured at the rack level, that provides even better data for evaluation. Rack temperatures also should be monitored to assess whether airflow distribution is working properly within the racks.
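Once rack-level power and inlet temperature readings are available, they can be rolled up into a baseline. The sketch below is illustrative only: the `RackReading` record, the `summarize_baseline` helper and the sample readings are hypothetical, and the 80.6 degrees Fahrenheit alert threshold (the upper end of the ASHRAE recommended range) is an assumption that each facility should replace with its own criteria.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RackReading:
    rack_id: str
    power_kw: float      # measured rack power, e.g. from metered rack PDUs
    inlet_temp_f: float  # measured rack inlet air temperature, deg F


def summarize_baseline(readings, inlet_limit_f=80.6):
    """Roll measured rack data up into a capacity baseline.

    inlet_limit_f is an assumed alert threshold, not a fixed rule.
    """
    total_kw = sum(r.power_kw for r in readings)
    hot_racks = [r.rack_id for r in readings if r.inlet_temp_f > inlet_limit_f]
    return {
        "total_kw": total_kw,
        "avg_kw_per_rack": mean(r.power_kw for r in readings),
        # Racks running hot at the inlet may point to poor airflow distribution.
        "hot_racks": hot_racks,
    }


# Hypothetical readings for three racks.
readings = [
    RackReading("A1", 4.2, 72.0),
    RackReading("A2", 6.8, 83.5),
    RackReading("B1", 3.0, 70.1),
]
print(summarize_baseline(readings))
```

Here rack A2 would be flagged, suggesting its airflow deserves a closer look before more load is placed there.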

Identifying the target reliability factor. The Uptime Institute (www.uptimeinstitute.com), New York City, has defined four levels of criteria to classify the resiliency and availability of data center assets. Generally, the criteria outline increasing levels of power and cooling component redundancy as well as the infrastructure pathway redundancy required within each defined level. The differences in infrastructure design and cost among the four levels are significant.

Usually, health care facilities aim for Tier III as a minimum. This means that the power and cooling infrastructure needs N + 1 redundancy: at least one more component than the load requires. For cooling, it means an alternate source is available if the primary source fails. For power, it means two sources: utility power and emergency generator power. In addition, each rack must be fed by two circuits from separate sources.
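The arithmetic behind N + 1 and dual circuits is simple but easy to overstate when reading equipment nameplates. The minimal sketch below assumes identical units (UPS modules, cooling units) and treats the rack's two circuits as equals; the function names and example figures are hypothetical.

```python
def usable_capacity_n_plus_1(unit_count: int, unit_capacity: float) -> float:
    """Capacity that can be committed to load while one unit stays in reserve."""
    if unit_count < 2:
        raise ValueError("N + 1 needs at least two units")
    return (unit_count - 1) * unit_capacity


def dual_circuit_rack_ok(rack_load_kw: float, circuit_capacity_kw: float) -> bool:
    """Either of a rack's two circuits must carry the full rack load alone
    if the other circuit or its source fails."""
    return rack_load_kw <= circuit_capacity_kw


# Hypothetical: four 100 kW UPS modules in an N + 1 arrangement.
print(usable_capacity_n_plus_1(4, 100.0))
# Hypothetical: a 4.5 kW rack on two 5 kW circuits.
print(dual_circuit_rack_ok(4.5, 5.0))
```

In other words, four 100 kW modules support only 300 kW of load at N + 1, and a dual-corded rack should normally run each circuit at no more than about half of its rating.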

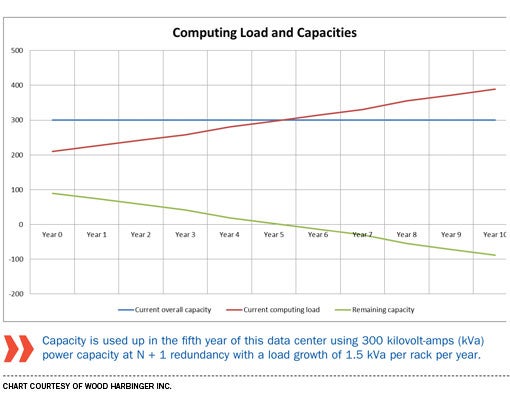

Predicting growth. Predicting future load growth accurately is next to impossible. If the facility has several years of rack-level power usage history, those trends can serve as the basis for its assumptions. If not, load growth per rack can be estimated from general knowledge of upcoming application deployments.

It is more important for facilities professionals to understand that they are developing a model with defined underlying assumptions. The model takes the measured baseline loads and incrementally adds the expected load growth for each year. The upper limit is the total available capacity of the infrastructure while maintaining an N + 1 redundancy factor.

The upper limit of capacity is defined by the total available power to the data center, as well as the total available cooling. These values are determined from the design drawings and documentation.
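The growth model described above can be expressed in a few lines. This is a sketch under stated assumptions: growth is a constant number of kilowatts per year, the capacities passed in already exclude the redundant (+1) units, and the function name and inputs are hypothetical. Whichever of power or cooling runs out first sets the limit.

```python
TON_TO_KW = 3.517  # 1 ton of refrigeration = 12,000 Btu/h, about 3.517 kW


def first_year_over_capacity(baseline_kw, growth_kw_per_year,
                             usable_power_kw, usable_cooling_tons,
                             horizon_years=15):
    """Return the first year in which projected load exceeds capacity, or None.

    usable_power_kw and usable_cooling_tons should already exclude the
    redundant unit so that N + 1 is preserved at the limit.
    """
    # The tighter of power and cooling defines the upper limit.
    limit_kw = min(usable_power_kw, usable_cooling_tons * TON_TO_KW)
    for year in range(horizon_years + 1):
        # Measured baseline plus the assumed growth added each year.
        projected_kw = baseline_kw + growth_kw_per_year * year
        if projected_kw > limit_kw:
            return year
    return None


# Hypothetical inputs: 250 kW measured today, 30 kW/yr assumed growth,
# 400 kW usable UPS capacity and 100 tons usable cooling (both N + 1).
print(first_year_over_capacity(250, 30, 400, 100))
```

With these made-up numbers, cooling (about 352 kW) rather than power is the binding constraint, and the projected load crosses it in year 4. Changing the growth assumption and rerunning is the whole point of the model.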

Monitoring and management

Once assets have been examined and estimates of future growth have been made, various software packages are available to help health facilities professionals decide how to redistribute and optimize power use. They may find that savings can be realized with small changes. The software includes:

Data center infrastructure management tools. This software monitors energy usage at the rack level as well as the performance of the information technology (IT) systems. The value of data center infrastructure management (DCIM) is that it provides real-time data and performance measurement. The actual demand load of the IT systems is often lower than estimated, because not all equipment draws its rated power at the same time, and these tools can be used to uncover that additional capacity.
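One way to quantify the capacity that DCIM data can uncover is to compare the measured coincident peak with the nameplate total that planning is often based on. The sketch below is illustrative; the helper names and the 200-server example are hypothetical, and any capacity recovered this way should still carry a safety margin.

```python
from typing import Sequence


def demand_factor(nameplate_kw: Sequence[float], measured_peak_kw: float) -> float:
    """Ratio of the measured coincident peak to the sum of nameplate ratings."""
    return measured_peak_kw / sum(nameplate_kw)


def uncovered_capacity_kw(nameplate_kw: Sequence[float], measured_peak_kw: float) -> float:
    """Capacity reserved on paper (nameplate basis) that the measured peak never uses."""
    return sum(nameplate_kw) - measured_peak_kw


# Hypothetical: 200 servers rated 0.75 kW each; DCIM reports a 90 kW coincident peak.
servers = [0.75] * 200
print(demand_factor(servers, 90.0))
print(uncovered_capacity_kw(servers, 90.0))
```

Here the equipment draws about 60 percent of its nameplate total at peak, leaving roughly 60 kW that a nameplate-based plan would treat as already spoken for.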

DCIM tools also can predict peak and non-peak loads. Health facilities professionals may discover that some computing tasks can be scheduled to run at times of day when the system is not operating at full capacity. Essentially, the workload is leveled out, making the most efficient use of the available infrastructure capacity.
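Workload leveling can be illustrated with a simple greedy heuristic: place each deferrable job into the hour that currently has the lowest load. This is a sketch, not how any particular DCIM or job scheduler works; it assumes every deferrable job lasts exactly one hour and has no deadline, and the load profile is hypothetical.

```python
import heapq


def level_deferrable_jobs(hourly_base_kw, jobs_kw):
    """Greedy leveling for deferrable one-hour jobs.

    Jobs are placed largest first, each into the hour with the lowest
    current load, so they fill the valleys instead of adding to the peak.
    """
    loads = list(hourly_base_kw)
    heap = [(load, hour) for hour, load in enumerate(loads)]
    heapq.heapify(heap)
    placement = []
    for job_kw in sorted(jobs_kw, reverse=True):
        load, hour = heapq.heappop(heap)  # least-loaded hour right now
        loads[hour] = load + job_kw
        placement.append((job_kw, hour))
        heapq.heappush(heap, (loads[hour], hour))
    return loads, placement


# Hypothetical 24-hour profile: quiet overnight, busy daytime, moderate evening.
base = [50] * 8 + [80] * 10 + [60] * 6
loads, placement = level_deferrable_jobs(base, [10, 10, 5])
print(placement)   # [(10, 0), (10, 1), (5, 2)]
print(max(loads))  # 80
```

The daily peak stays at 80 kW; running both 10 kW jobs in the same daytime hour would have pushed it to 100 kW.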

Data center predictive modeling tools. This set of software tools goes hand in hand with DCIM tools. While DCIM collects data on IT system performance and energy usage, data center predictive modeling (DCPM) tools take that data as a baseline, allowing IT staff to see the impact of additional equipment on the IT environment.

Using DCPM in tandem with DCIM allows health facilities professionals to put more of the existing capacity to work by starting from real-time data and then overlaying scenarios of added equipment to determine what can be added without modifying the existing infrastructure.
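Commercial DCPM tools typically model airflow in detail; the sketch below reduces the "what can be added" question to a per-rack check against measured load. The `Rack` fields, the 10 percent margin and the rule of choosing the rack with the most headroom are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    rack_id: str
    measured_kw: float       # real-time load reported by DCIM
    power_limit_kw: float    # what the rack's circuits can deliver
    cooling_limit_kw: float  # heat the local cooling can remove at this position


def headroom_kw(rack: Rack, margin: float = 0.9) -> float:
    # Stay an assumed 10 % below the tighter of the power and cooling limits.
    return margin * min(rack.power_limit_kw, rack.cooling_limit_kw) - rack.measured_kw


def best_rack_for(racks, added_kw, margin=0.9):
    """Return the rack with the most headroom that can absorb added_kw, or None."""
    fits = [r for r in racks if headroom_kw(r, margin) >= added_kw]
    if not fits:
        return None  # this scenario would require infrastructure changes
    return max(fits, key=lambda r: headroom_kw(r, margin)).rack_id


# Hypothetical racks: (id, measured kW, power limit kW, cooling limit kW).
racks = [
    Rack("A", 4.0, 8.0, 6.0),
    Rack("B", 3.0, 8.0, 10.0),
    Rack("C", 7.5, 12.0, 12.0),
]
print(best_rack_for(racks, 3.5))  # B
print(best_rack_for(racks, 5.0))  # None
```

Rack A has spare power but is cooling-limited, which is exactly the kind of mismatch this overlay is meant to expose.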

Optimizing power systems

If these strategies don't streamline data center systems to the desired level, health facilities professionals have more options available. They include:

Server virtualization. Most health care facilities are moving toward server virtualization, the practice of running multiple virtual servers on a single physical host to get more computing capability out of each hardware platform. This allows more applications to reside in a smaller footprint. However, it also increases the load density and the cooling required per rack. Virtualization does not increase the overall power and cooling capacity, but it may buy time before health facilities professionals need to upsize their hospital's power and cooling infrastructure.
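The trade-off between lower total load and higher rack density can be made concrete with a back-of-the-envelope calculation. All figures below (server and host power draw, the 5:1 consolidation ratio, rack counts) are hypothetical, and the helper name is illustrative.

```python
import math


def consolidation_effect(servers, kw_per_server, racks_before,
                         vms_per_host, kw_per_host, racks_after):
    """Compare total load and rack density before and after virtualization."""
    hosts = math.ceil(servers / vms_per_host)  # physical hosts needed
    total_before = servers * kw_per_server
    total_after = hosts * kw_per_host
    return {
        "hosts": hosts,
        "total_kw_before": total_before,
        "total_kw_after": total_after,
        "kw_per_rack_before": total_before / racks_before,
        "kw_per_rack_after": total_after / racks_after,
    }


# Hypothetical: 40 servers at 0.35 kW spread over 4 racks, consolidated 5:1
# onto hosts drawing 0.8 kW each, all placed in a single rack.
print(consolidation_effect(40, 0.35, 4, 5, 0.8, 1))
```

Total load falls from about 14 kW to 6.4 kW, yet the remaining rack goes from 3.5 kW to 6.4 kW, so local cooling, not total capacity, may become the constraint.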

Redistributing noncritical applications. Health facilities professionals should consider hosting their noncritical applications off-site or moving them to a cloud computing vendor. This can ease the burden of accommodating growth on-site and reserve available capacity for mission-critical applications. Any analysis of off-site hosting should include an assessment of the security issues as well as the return on investment. In many cases, off-site hosting proves to be a viable growth strategy.

Uninterruptible power supply systems. Health facilities professionals should look at their existing uninterruptible power supply (UPS) equipment. Can it be upgraded, or its capacity incrementally increased, to carry more load? Is the load distributed evenly across all phases (power legs) of the UPS? An even load distribution lets the UPS run more efficiently and may free up additional capacity, because the most heavily loaded phase limits how much more load the UPS can accept.
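A quick phase-balance check shows how an uneven distribution strands UPS capacity. This sketch assumes a three-phase UPS whose rating is split equally across its phases and that new load is added evenly to all three; the function name and readings are hypothetical, and manufacturer documentation governs the actual per-phase limits.

```python
def ups_phase_report(phase_kw, ups_rating_kw):
    """Phase-balance check for a three-phase UPS (equal per-phase rating assumed)."""
    per_phase_limit = ups_rating_kw / 3
    total = sum(phase_kw)
    avg = total / 3
    # Imbalance as the largest deviation from the average phase load.
    imbalance_pct = max(abs(p - avg) for p in phase_kw) / avg * 100
    return {
        "imbalance_pct": imbalance_pct,
        # Load spread evenly over all phases stops when the heaviest phase is full.
        "even_add_as_is_kw": 3 * (per_phase_limit - max(phase_kw)),
        # If existing loads were rebalanced first, the full remaining rating is usable.
        "even_add_balanced_kw": ups_rating_kw - total,
    }


# Hypothetical 300 kW UPS with phase loads of 90, 70 and 65 kW.
print(ups_phase_report([90, 70, 65], 300))
```

With these readings the phases are about 20 percent out of balance; as loaded, only 30 kW of evenly distributed load can be added, versus 75 kW after rebalancing.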

Cooling system action

Plenty also can be done on the cooling side to make the most of existing data center systems.

Most existing data centers use room-based precision cooling systems, which have an exterior condenser or drycooler and pump. In-room cooling equipment generally is used with under-floor supply air and passive return air. Precision cooling systems typically use inefficient electric heating elements to evaporate water for humidification and to reheat air that has been overcooled for dehumidification.

This equipment typically is employed to maintain the room temperature at 70 degrees Fahrenheit, plus or minus 1 degree, and the room relative humidity at 50 percent, plus or minus 5 percent. Operating at higher inlet temperatures allows more system options, including fan systems that vary airflow in lieu of using electric reheat to dehumidify, desiccant dehumidification, adiabatic humidification, pumped refrigerant, in-row/in-rack cooling and containment methods.

Prior to incorporating any of these new system options, health facilities professionals should consider which of the following strategies can best maximize the energy savings in their existing data centers:

Separating Class A1 IT equipment from late-model and non-storage equipment. The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) Technical Committee (TC) 9.9 2011 Thermal Guidelines for Data Processing Environments classifies IT equipment by its allowable operating temperature and humidity limits.

Facilities professionals can specify Class A4 equipment (with larger fans and heat sinks) for all new IT purchases and operate a newly built environment closer to the ASHRAE allowable inlet temperature for that class, which is 113 degrees Fahrenheit (45 degrees Celsius). One option is installing the Class A4 equipment in the return air path of the Class A1 equipment, so that one cooling system cools two contained equipment areas in series. Energy savings should be weighed against increased failure rates and higher noise levels.

Employing ASHRAE expanded allowable temperature and humidity ranges on existing equipment. The ASHRAE recommended temperature and humidity ranges are similar to those traditionally employed. The expanded allowable range for typical IT equipment raises the maximum inlet temperature to 89.6 degrees Fahrenheit for Class A1 and 95 degrees Fahrenheit for Class A2.

The allowable humidity range for this equipment is 20 to 80 percent relative humidity, an important departure from previous guidelines. Raising temperature alone has limited effectiveness at expanding capacity, because the cooling equipment is sized to reject a maximum amount of total heat regardless of the specified temperature difference. Expanding the operating humidity range can significantly reduce cooling loads, because less energy is spent on humidification and on dehumidification with reheat.
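Before raising setpoints, measured inlet conditions can be screened against the allowable envelope for each equipment class. The sketch below encodes the Class A1 and A2 limits as we understand them from the 2011 ASHRAE guidelines (dry-bulb in degrees Fahrenheit, relative humidity in percent); dew point limits are deliberately omitted, so the guideline itself should be checked before relying on the result. The table and function names are hypothetical.

```python
# Allowable inlet envelopes for Class A1 and A2 (ASHRAE TC 9.9, 2011), as
# understood for this sketch. Dew point limits are not modeled here.
ALLOWABLE = {
    "A1": {"temp_f": (59.0, 89.6), "rh_pct": (20.0, 80.0)},
    "A2": {"temp_f": (50.0, 95.0), "rh_pct": (20.0, 80.0)},
}


def within_allowable(equipment_class: str, inlet_temp_f: float, rh_pct: float) -> bool:
    """True if a measured inlet condition falls inside the class's allowable envelope."""
    t_lo, t_hi = ALLOWABLE[equipment_class]["temp_f"]
    rh_lo, rh_hi = ALLOWABLE[equipment_class]["rh_pct"]
    return t_lo <= inlet_temp_f <= t_hi and rh_lo <= rh_pct <= rh_hi


print(within_allowable("A1", 92.0, 35.0))  # False: above the A1 maximum
print(within_allowable("A2", 92.0, 35.0))  # True
```

Running a check like this over DCIM inlet data shows which racks could tolerate a higher supply temperature and which would hold the room back.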

Using expanded range to employ economizers. An economizer increases efficiency because the compressors do not have to operate in economizer mode. Also, jurisdictional energy codes have been revised to require economizers at legacy data centers.

Airside economizers draw outside air through the data center. Most existing data centers do not have space available for the additional ductwork and exterior louvers. Waterside economizers use a water-based system and multiple heat exchangers to reject heat to the ambient air. Each heat exchanger (coil, drycooler or cooling tower) adds an approach temperature, which significantly reduces the hours a waterside economizer can operate. However, the expanded range allows the use of waterside economizers in most areas of the United States.
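The effect of stacked heat exchangers, and of the expanded range, can be shown by counting the hours in which the economizer alone meets the supply air setpoint. This is a simplified sketch: the approach temperatures, setpoints and hourly temperatures are hypothetical, partial (integrated) economizer operation is ignored, and the outdoor temperature should be dry-bulb for a drycooler or wet-bulb for a cooling tower.

```python
def full_economizer_hours(outdoor_temps_f, supply_air_setpoint_f, approaches_f):
    """Count hours when the economizer alone can meet the supply air setpoint.

    Each heat exchanger in the chain adds its approach temperature, so the
    outdoor temperature plus the total approach must not exceed the setpoint.
    """
    total_approach = sum(approaches_f)
    return sum(1 for t in outdoor_temps_f if t + total_approach <= supply_air_setpoint_f)


# Hypothetical hourly outdoor temperatures (deg F) and approach values.
temps = [30, 40, 50, 55, 60, 65, 70, 75]
drycooler_and_coil = [10, 8]
print(full_economizer_hours(temps, 65, drycooler_and_coil))        # 2
print(full_economizer_hours(temps, 80, drycooler_and_coil))        # 5
print(full_economizer_hours(temps, 80, drycooler_and_coil + [5]))  # 4
```

Raising the setpoint from 65 to 80 degrees Fahrenheit more than doubles the economizer hours in this sample, while adding a third heat exchanger gives some of them back.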

Using expanded range to employ adiabatic cooling. Adiabatic cooling systems use outside air to cool water and air toward the wet-bulb temperature. Depending on climate, the peak wet-bulb temperature can be 28 degrees Fahrenheit lower than the peak dry-bulb temperature. Combining a lower-temperature heat sink with the expanded range (a higher source temperature) allows for several more compressorless options. Examples include direct evaporative cooling, indirect evaporative cooling or a cooling tower coil system.
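For direct evaporative cooling, the leaving air temperature is commonly estimated from the wet-bulb depression and the cooler's saturation effectiveness. The sketch below uses that standard relationship; the 0.85 effectiveness and the design-day temperatures are assumptions for illustration, and the comparison uses the Class A1 allowable maximum of 89.6 degrees Fahrenheit.

```python
def direct_evap_leaving_temp_f(dry_bulb_f, wet_bulb_f, effectiveness=0.85):
    """Leaving air temperature of a direct evaporative cooler.

    Air is cooled toward the wet-bulb temperature; effectiveness is the
    fraction of the wet-bulb depression actually achieved (assumed 0.85).
    """
    return dry_bulb_f - effectiveness * (dry_bulb_f - wet_bulb_f)


# Hypothetical design day with a 28 deg F wet-bulb depression.
supply_f = direct_evap_leaving_temp_f(95.0, 67.0)
print(round(supply_f, 1))  # 71.2
print(supply_f <= 89.6)    # True: below the Class A1 allowable maximum
```

Even on this hot design day, the evaporatively cooled supply air stays well inside the expanded range without running a compressor.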

More with less

While the strained economy has touched just about every aspect of the health care facility, it doesn't have to negatively impact plans to create a data center that runs efficiently and effectively. By taking inventory of existing assets, monitoring energy use and optimizing systems, an improved data center is possible without breaking the bank.

Tom Leonidas Jr., P.E., is executive vice president and David Derse, P.E., LEED AP BD+C, is an associate at Wood Harbinger Inc., Bellevue, Wash. They can be reached at tleonidas@woodharbinger.com and dderse@woodharbinger.com.

| Sidebar - Facility charts data center growth |

| A major East Coast academic medical facility recently took steps to chart a path for both present and future growth of its data. Driven in part by deployment of a new electronic health record, the hospital had to address the burgeoning growth of not only patient records but also medical research data. Strategy development began by taking inventory of servers and applications, along with the power draw within the data center. One of the hospital's first tasks was to identify legacy applications that were no longer used. The hospital also developed a plan to virtualize applications on virtual servers, which allowed the total physical server count to fall from around 750 to just 450. This reduced both the physical footprint and total overall energy consumption. The facility's strategy also included the use of an outside-hosted data center to off-load some applications that did not need to be housed on-site. Because the hospital did not have physical space to grow its data center, and doing so would have been cost-prohibitive, the use of an outside facility is allowing it to incrementally support growing needs as they arise. In the end, a number of strategies working together helped to create additional capacity for this institution and provided a three- to four-year return on investment. The key was planning and thinking about how all the different pieces work together to create substantial savings. |